20071018

20071015

20070920

Needles!

It was probably the first time anyone had handed this tattoo artist a copy of The Human Brain Coloring Book as reference material, but this didn't seem to be a career milestone. He liked that it was braaainnnzz, though. So did some of the other artists. They kept stopping in to hold conversations, many consisting (so far as I could tell, with my head mashed into the chair) of "Ooh, it's a braaainnnzz, eh?"

The white things are neurons; the quote is from Hilbert the year before Goedel published his Incompleteness Theorem. (Likewise I have answers to "zomg wedding dress," "zomg AIDS," and "wtf," although the last is a bit involved.) The only unfortunate thing at this point is the total failure of such an endeavor to generate a dinner story. Ah well. Perhaps indirectly.

20070914

Conformal maps on photography

I just found a Flickr set with some cool examples of applying conformal maps to photography.

20070911

20070909

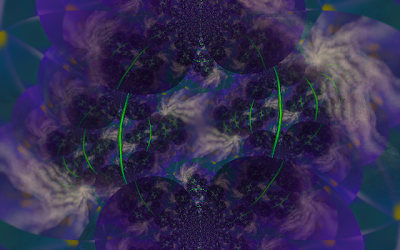

Fractal neurofeedback

20070903

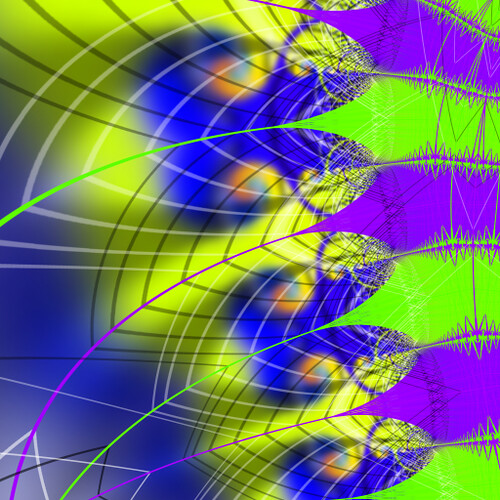

More screenshots

Here's the latest.

Edit: I've added some more screenshots to the gallery, with tasty vertical symmetry imposed by mirroring.

Here I'm trying out some different maps, and also incorporating a camera feed, which is what gives it the more fuzzy, organic look. The geometric patterns with n-way radial symmetry come from z' = z*c, which gives simple scaling and rotation. The squished circles come from z' = sin(real(p) + t) + i*sin(imag(p)), where p = z^2 + c and t is a real parameter.
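For anyone who wants to poke at these two maps outside the renderer, here is a minimal Python sketch. The function names are made up for illustration; only the formulas come from the text above.

```python
import cmath
import math


def scale_rotate(z: complex, c: complex) -> complex:
    """z' = z*c: scales by |c| and rotates by arg(c)."""
    return z * c


def squished_circles(z: complex, c: complex, t: float) -> complex:
    """z' = sin(real(p) + t) + i*sin(imag(p)), where p = z^2 + c."""
    p = z * z + c
    return complex(math.sin(p.real + t), math.sin(p.imag))


if __name__ == "__main__":
    # A unit-modulus c with angle 2*pi/6 is a pure 60-degree rotation.
    c = cmath.rect(1.0, 2 * math.pi / 6)
    print(scale_rotate(1 + 0j, c))
    print(squished_circles(0.3 + 0.4j, 0.1 - 0.2j, 0.5))
```

Because both outputs of the second map pass through a sine, every lookup lands inside the square [-1, 1] x [-1, 1], which is presumably part of why the result looks so contained.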

Posted by Keegan at 3.9.07 (3 comments)

Labels: art, fractals, graphics, media art, strange loop

20070901

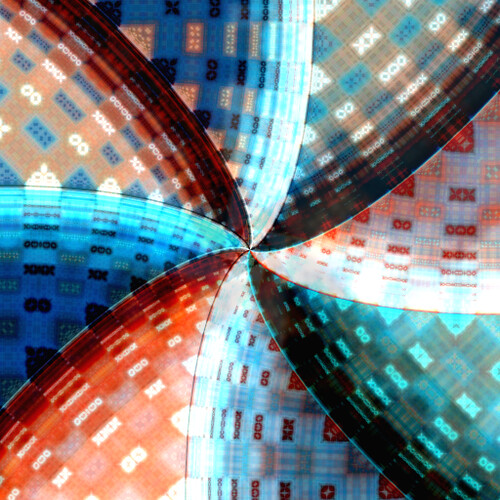

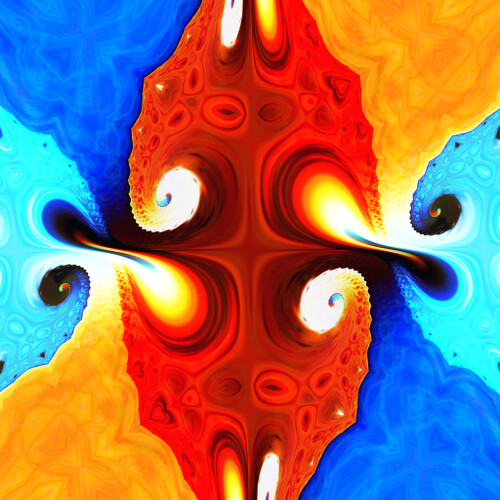

More fractal video feedback

I've been working on a new implementation of the fractal video feedback idea. Unlike the previous attempts, the code is nice and modular, so complicated bits of OpenGL hackery get encapsulated in an object with a simple interface. It's still very much a work in progress, but I thought I'd share some results now. Feedback (no pun intended) is very much appreciated.

Video:

Shoving the video through the YouTubes kills the quality. I have some higher quality screenshots in a Flickr gallery. Some of my favorites:

The basic idea is the same as Perceptron: take the previous frame, map it through some complex function, draw stuff on top, repeat. In this case, the "stuff on top" consists of a colored border around the buffer that changes hue, plus some moving polygons that can be inserted by the user (which aren't used in the video, but are in some of the stills). In these examples, the map is a convex combination of complex functions; in the video it's z' = a*log(z)*c + (1-a)*(z^2+c). Here z is the point being rendered, z' is the point in the previous frame where we get its color, c is a complex parameter, and a is a real parameter between 0 and 1.
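The loop is easier to see in code than in prose. What follows is a toy CPU sketch, not the OpenGL implementation: it uses a small grayscale grid, nearest-neighbour lookups, and a constant white border in place of the hue-cycling one. The function names, the `extent` parameter and the handling of log(0) are all assumptions made for illustration.

```python
import cmath
import math


def blend_map(z: complex, c: complex, a: float) -> complex:
    """z' = a*log(z)*c + (1-a)*(z^2 + c), with a in [0, 1]."""
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    # log(0) is undefined; treating that term as 0 is an arbitrary choice.
    log_term = cmath.log(z) * c if z != 0 else 0j
    return a * log_term + (1.0 - a) * (z * z + c)


def feedback_step(prev, c, a, extent=2.0, border=1.0):
    """One pass of the loop over a square buffer (list of rows, values in [0, 1])."""
    n = len(prev)
    nxt = [[0.0] * n for _ in range(n)]
    for row in range(n):
        for col in range(n):
            if row in (0, n - 1) or col in (0, n - 1):
                nxt[row][col] = border  # "stuff on top": redraw the border each frame
                continue
            # Pixel centre -> point z in the square [-extent, extent]^2.
            z = complex((col + 0.5) / n * 2 * extent - extent,
                        (row + 0.5) / n * 2 * extent - extent)
            w = blend_map(z, c, a)
            # Point z' -> pixel of the previous frame.
            src_col = math.floor((w.real + extent) / (2 * extent) * n)
            src_row = math.floor((w.imag + extent) / (2 * extent) * n)
            if 0 <= src_row < n and 0 <= src_col < n:
                nxt[row][col] = 1.0 - prev[src_row][src_col]  # invert on each pass
            # Lookups that fall outside the buffer are left black.
    return nxt


if __name__ == "__main__":
    frame = [[0.0] * 32 for _ in range(32)]
    for _ in range(10):
        frame = feedback_step(frame, c=0.3 + 0.2j, a=0.5)
    print(sum(map(sum, frame)) / 32 ** 2)  # mean brightness after ten passes
```

Setting a = 0 reduces the map to the familiar z^2 + c, and a = 1 leaves only the logarithmic term.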

There are two modes: interactive and animated. In interactive mode, c and a are controlled with a joystick (which makes it feel like a flight simulator on acid). The user can also place control points in this (c,a) space. In animated mode, the parameters move smoothly between these control points along a Catmull-Rom spline, which produces a nice C1 continuous curve.
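As a rough illustration of the animated mode, here is a sketch of uniform Catmull-Rom interpolation through control points stored as (Re c, Im c, a) triples. The triple layout, the repeated end points and the clamping of a are assumptions; the actual code may handle all three differently.

```python
def catmull_rom(p0, p1, p2, p3, t):
    """Point on the uniform Catmull-Rom segment from p1 (t=0) to p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (c - a) * t
               + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def sample_path(points, u):
    """Evaluate the spline through `points` (at least two) at u in [0, len(points) - 1]."""
    last = len(points) - 1
    i = min(int(u), last - 1)  # index of the segment containing u
    # Repeat the end points so the first and last segments have neighbours.
    p0 = points[max(i - 1, 0)]
    p3 = points[min(i + 2, last)]
    re, im, a = catmull_rom(p0, points[i], points[i + 1], p3, u - i)
    # The curve can overshoot its control points, so keep a inside [0, 1].
    return complex(re, im), min(max(a, 0.0), 1.0)


if __name__ == "__main__":
    controls = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5), (1.0, 1.0, 1.0), (0.0, 1.0, 0.5)]
    for step in range(7):
        print(sample_path(controls, step * 0.5))
```

The curve passes through every control point, and the tangent at each one depends only on its two neighbours, which is where the C1 continuity comes from.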

The feedback loop is rendered offscreen at 4096x4096 pixels. Since colors are inverted every time through the loop, only every other frame is drawn to the screen, to make it somewhat less seizuretastic. At this resolution, the system has 48MB of state. On my GeForce 8800GTS I can get about 100 FPS in this loop; by a conservative estimate of the operations involved, this is about 60 GFLOPS. I bow before NVIDIA. Now if only I had one of these...
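A rough check of those figures, assuming the state is a single buffer of 8-bit RGB (3 bytes per pixel), which is a guess at where the 48MB comes from:

$$4096 \times 4096 \times 3\ \text{bytes} = 50{,}331{,}648\ \text{bytes} = 48\ \text{MiB}$$

$$\frac{60 \times 10^{9}\ \text{FLOP/s}}{4096 \times 4096 \times 100\ \text{pixels/s}} \approx 36\ \text{FLOP per pixel per pass}$$

So the 60 GFLOPS estimate corresponds to a budget of a few dozen floating-point operations for each pixel on each trip through the loop.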

Posted by Keegan at 1.9.07 (4 comments)

Labels: art, fractals, graphics, media art, strange loop

20070817

David Darling: Equations of Eternity

Subtitle: "Speculations on Consciousness, Meaning, and the Mathematical Rules that Orchestrate the Cosmos." In my defense for checking it out of the library, the subtitle of Crick's Astonishing Hypothesis is "The Scientific Search for the Soul," and that was the publisher's fault.

It turns out, though, to be just as amusing as the subtitle would suggest. In particular, it contains the first serious exposition of the homunculus fallacy I've ever read:

Not least, the forebrain serves as the brain's "projection room," the place where sensory data is transformed and put on display for internal viewing. In our case, we are (or can be) actually aware of someone sitting in the projection room. But the fish's forebrain is so tiny that it surely possesses no such feeling of inner presence. There is merely the projection room itself, and a most primitive one at that.

This occurs, thankfully, on page 7; and it's a determined reader who's made it through manglings of the Heisenberg uncertainty principle and the word "evolve." But if you can stomach any more of this guy I'd bet the rest of the book is hilarious.

On a side note I don't know if the following argument makes any sense:

Say you have a perfect digital model of a finite universe containing conscious beings. Assume anything that appears random in our world (i.e. exact positions of subatomic particles) may be modeled as pseudorandom. So we have an extrinsically explicit representation of the world, but not identity; characteristics of one particle are represented by the states and relationships of many other particles. The way the data is organized, from our point of view as programmers, can't possibly make the difference between whether the simulated creatures are actually conscious or not. Either they are, and perhaps we should consider the ethics of writing murder mysteries, or they are not, and there is something very special about the most efficient form of information storage. Or our universe isn't finite and so we don't have to care.

20070815

Arduino and ultrasonic rangefinders

If you've been following new media art blogs at all, you've probably heard of Arduino. Basically, it puts together an AVR microcontroller, supporting electronics, USB or serial for programming, and easy access to digital and analog I/O pins. The programming is very simple, using a language almost identical to C, and no tedious initialization boilerplate (compare to the hundreds of lines of assembly necessary to get anything working in EE 51). This seems like a no-hassle way to play with microcontroller programming and interfacing to real-world devices like sensors, motors, etc.

Another cool thing I found is the awkwardly named PING))) Ultrasonic Rangefinder. It's a device which detects distance to an object up to 3 meters away. A couple of these strategically placed throughout a room, possibly mounted on servos to scan back and forth, could be used for crowd feedback as we've discussed here previously. They're also really easy to interface to.
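Ultrasonic rangefinders of this kind work by timing an echo, so the conversion to distance is a one-liner. The sketch below is plain Python rather than Arduino code, and it assumes the sensor hands back the round-trip time of the pulse; the function name and the 343 m/s figure (dry air at roughly 20 °C) are illustrative choices, and only the 3 meter range comes from the text above.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees C


def echo_to_distance_m(echo_seconds, max_range_m=3.0):
    """Convert a round-trip echo time to a one-way distance in meters.

    Returns None when the result is beyond the sensor's usable range.
    """
    if echo_seconds < 0:
        raise ValueError("echo time cannot be negative")
    distance = SPEED_OF_SOUND_M_PER_S * echo_seconds / 2.0  # out and back
    return distance if distance <= max_range_m else None


if __name__ == "__main__":
    for microseconds in (1000, 5000, 10000, 20000):
        print(microseconds, echo_to_distance_m(microseconds / 1e6))
```

At this speed a 3 meter target corresponds to an echo of about 17.5 milliseconds, which caps how fast a single sensor can be polled while scanning.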

Update: I thought of a cool project using these components plus an accelerometer, in a flashlight form factor. The accelerometer provides dead-reckoning position; with rangefinding this becomes a coarse-grained 3d scanner, suitable for interpretive capture of large objects such as architectural elements (interpretive, because the path taken by the user sweeping the device over the object becomes part of the input). I may not be conveying what exactly I mean or why this is cool, but this is mostly a note to myself anyway. So there.
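For the dead-reckoning part, the core operation is integrating acceleration twice. Here is a one-axis sketch, assuming evenly spaced samples and a start from rest at the origin; the function name is hypothetical, and a real device would also need gravity removal and orientation tracking, which this ignores.

```python
def integrate_position(accels, dt):
    """Integrate acceleration samples (m/s^2) twice, starting at rest at the origin.

    Returns the list of positions, one per sample plus the starting point.
    """
    velocity = 0.0
    position = 0.0
    path = [position]
    for a in accels:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
        path.append(position)
    return path


if __name__ == "__main__":
    # One second of constant 1 m/s^2 acceleration sampled at 10 Hz.
    print(integrate_position([1.0] * 10, 0.1)[-1])
```

Any constant bias in the accelerometer gets integrated twice as well, so position error grows with the square of elapsed time. That is likely why the idea is framed as coarse-grained.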

20070814

Revelation

Earlier this week, I experienced a religious revelation.

I was reading a truly depressing article about the economy in the NYT and listening to Take a Bow by Muse, and God talked to me. I felt a tingling of strange energy, and I felt an urge to stand up. I stretched out my arms, looking up towards some strange, invisible light. I began to tremble, and as the song hit its climax, I fell into my bed, and received this message.

"We are fucked. We have put our faith in a system which is smoke and mirrors, and it is falling apart. All debts are being called in, and we cannot cover them. You were too clever by half, and now it's going to fuck you up."

"Switch your portfolio to gold and guns."

Fortunately, I am an atheist, and don't have to listen to God.

20070725

Extraction of musical structure

I think my next big project will involve automatically extracting structure from music. Mike and I had some discussions about doing this with machine learning / evolutionary algorithms, which produced some interesting ideas. For now I'm implementing some of the more traditional signal-processing techniques. There's an overview of the literature in this paper.

What I have to show so far is this:

This (ignoring the added colors) is a representation of the autocorrelation of a piece of music ("Starlight" by Muse). Each pixel of distance in either the x or y axis represents one second of time, and the darkness of the pixel at (x,y) is proportional to the difference in average intensity between those two points in time. Thus, light squares on the diagonal represent parts of the song that are homogeneous with respect to energy.
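The computation behind a plot like this is short. The sketch below assumes "average intensity" means mean squared amplitude over each one-second window and that the input is a list of mono samples; both function names are made up, and the real code may differ on each point.

```python
def mean_energy_per_second(samples, sample_rate):
    """Average squared amplitude over each whole second of audio."""
    seconds = len(samples) // sample_rate
    return [
        sum(s * s for s in samples[i * sample_rate:(i + 1) * sample_rate]) / sample_rate
        for i in range(seconds)
    ]


def difference_matrix(energy):
    """m[y][x] = |energy[x] - energy[y]|, scaled so the largest entry is 1.

    Expects at least one second of energy values.
    Larger values would be drawn darker; zeros on and near the diagonal
    show up as light squares.
    """
    raw = [[abs(ex - ey) for ex in energy] for ey in energy]
    peak = max(max(row) for row in raw) or 1.0  # avoid dividing by zero
    return [[v / peak for v in row] for row in raw]


if __name__ == "__main__":
    # Two quiet "seconds" followed by two loud ones, at a toy rate of 2 samples/s.
    samples = [0.1] * 4 + [1.0] * 4
    for row in difference_matrix(mean_energy_per_second(samples, 2)):
        print(" ".join(f"{v:.2f}" for v in row))
```

In the toy example the output has two light 2x2 blocks on the diagonal and dark blocks off it, the same pattern as the sections in the plot.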

The colored boxes were added by hand, and represent the musical structure (mostly, which instruments are active). So it's clear that the autocorrelation plot does express structure, although at this crude level it's probably not good enough for extracting this structure automatically. (For some songs, it would be; for example, this algorithm is very good at distinguishing "guitar" from "guitar with screaming" in "Smells Like Teen Spirit" by Nirvana.) An important idea here is that the plot can show not only where the boundaries between musical sections are, but also which sections are similar (see for example the two cyan boxes above).

The next step will be to compare power spectra obtained via FFT, rather than a one-dimensional average power. This should help distinguish sections which have similar energy but use different instruments. The paper referenced above also used global beat detection to lock the analysis frames to beats (and to measures, by assuming 4/4 time). This is fine for DDR music (J-Pop and terrible house remixes of 80's music) but maybe we should be a bit more general. On the other hand, this approach is likely to improve quality when the assumptions of constant meter and tempo are met.
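To show why spectra help, here is a small sketch that compares two frames by the distance between their power spectra. It uses a naive O(n^2) DFT from the standard library instead of a real FFT, and plain Euclidean distance as the metric; both are stand-ins chosen to keep the example self-contained, not what the final code would use.

```python
import cmath
import math


def power_spectrum(frame):
    """Power in each non-negative frequency bin, via a naive DFT."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(frame))) ** 2 / n
        for k in range(n // 2 + 1)
    ]


def spectral_distance(frame_a, frame_b):
    """Euclidean distance between the power spectra of two equal-length frames."""
    pa, pb = power_spectrum(frame_a), power_spectrum(frame_b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(pa, pb)))


if __name__ == "__main__":
    n = 64
    low = [math.sin(2 * math.pi * 4 * i / n) for i in range(n)]
    high = [math.sin(2 * math.pi * 8 * i / n) for i in range(n)]
    print(sum(x * x for x in low), sum(x * x for x in high))  # same energy
    print(spectral_distance(low, high))                       # clearly non-zero
```

The two test tones have identical energy, so the one-dimensional measure cannot tell them apart, while the spectral distance can.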

On the output side, I'm thinking of using this to control the generation of flam3 animations. The effect would basically be Electric Sheep synced up with music of your choice, including smooth transitions between sheep at musical section boundaries. The sheep could be automatically chosen, or selected from the online flock in an interactive editor, which could also provide options to modify the extracted structure (associate/dissociate sections, merge sections, break a section into an integral number of equal parts, etc.) For physical installation, add a beefy compute cluster (for realtime preview), an iPod dock / USB port (so participants can provide their own music), a snazzy touchscreen interface, and a DVD burner to take home your creations.

20070718

Simple DIY multitouch interfaces

OpenCV : open-source computer vision

OpenCV is an open source library from Intel for computer vision. To quote the page,

"This library is mainly aimed at real time computer vision. Some example areas would be Human-Computer Interaction (HCI); Object Identification, Segmentation and Recognition; Face Recognition; Gesture Recognition; Motion Tracking, Ego Motion, Motion Understanding; Structure From Motion (SFM); and Mobile Robotics."

Sounds like some of this could be pretty useful for interactive video neuro-art, or whatever the hell it is we're doing.

20070717

What if everything in the past has been a long string of coincidences? Where we observe and infer the law of gravity, it is just a coincidence that all those times stuff fell down. Balls flying through the air could have turned left, but they always happened to go straight. All natural laws could just be a highly improbable string of events.

In an entirely different but similar fiction:

Our universe appears to be free of contradictions. What if the many-worlds hypothesis were true, and there are often branches where some inherent contradiction occurs? These collapse/explode/disappear as they occur, and by the anthropic principle we only see consistent branches.

20070715

Whorld : a free, open-source visualizer for sacred geometry

From the homepage:

"Whorld is a free, open-source visualizer for sacred geometry. It uses math to create a seamless animation of mesmerizing psychedelic images. You can VJ with it, make unique digital artwork with it, or sit back and watch it like a screensaver."

Flock

From the artist's page:

Flock is a full evening performance work for saxophone quartet, conceived to directly engage audiences in the composition of music by physically bringing them out of their seats and enfolding them into the creative process. During the performance, the four musicians and up to one hundred audience members move freely around the performance space. A computer vision system determines the locations of the audience members and musicians, and it uses that data to generate performance instructions for the saxophonists, who view them on wireless handheld displays mounted on their instruments. The data is also artistically rendered and projected on multiple video screens to provide a visual experience of the score.

Perhaps you've already seen it, but I really like aleatoric music.

20070627

Idea: music visualization with spring networks

The basic idea is to connect a collection of springs into an arbitrary graph, then drive certain points in this graph with the waveform of a piece of music (possibly with some filtering, band separation, etc.) This could be restrained to two dimensions or allowed unrestricted use of three.

Spring constants could be chosen so the springs resonate with tones in the key of the piece. Choosing these constants and the graph connectivity to be aesthetically pleasing would likely be an art form in and of itself. A good starting point would be interconnected concentric polygonal rings of varying stiffness. Symmetry seems like a must.

For a software implementation, a useful starting point would be CS 176 project 5; a cloth simulator that considers only edge terms is essentially a spring-network simulator. There are many ways to render the output; for example, draw nodes with opacity proportional to velocity, and/or draw edges with opacity proportional to stored energy. Use saturated colors on a black background, and render on top of blurred previous frames for a nice trail effect. Since I've already coded the gnarly math once, I might try to throw this together tomorrow evening, if I don't get distracted by something else.
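Here is a bare-bones sketch of such a simulator in 2D, independent of the CS 176 code. It uses semi-implicit Euler integration with linear damping, lets selected nodes be pinned to a driving signal, and picks stiffness from the textbook relation k = m(2πf)^2 for a single mass on a spring fixed at its other end. All names and the choice of integrator are assumptions for illustration.

```python
import math


def stiffness_for_tone(mass, freq_hz):
    """k such that a mass on a spring fixed at the far end resonates at freq_hz."""
    return mass * (2.0 * math.pi * freq_hz) ** 2


def step(pos, vel, edges, dt, mass=1.0, damping=0.5, driven=None):
    """Advance the network in place by one time step.

    pos, vel: lists of [x, y] per node; edges: (i, j, k, rest_length) tuples;
    driven: optional {node index: (x, y)} of nodes pinned to the input signal.
    """
    force = [[-damping * vx, -damping * vy] for vx, vy in vel]
    for i, j, k, rest in edges:
        dx, dy = pos[j][0] - pos[i][0], pos[j][1] - pos[i][1]
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue  # direction undefined for coincident nodes; skip the edge
        f = k * (length - rest) / length  # Hooke's law, per unit of (dx, dy)
        force[i][0] += f * dx
        force[i][1] += f * dy
        force[j][0] -= f * dx
        force[j][1] -= f * dy
    for n in range(len(pos)):
        vel[n][0] += dt * force[n][0] / mass
        vel[n][1] += dt * force[n][1] / mass
        pos[n][0] += dt * vel[n][0]
        pos[n][1] += dt * vel[n][1]
    for n, (x, y) in (driven or {}).items():
        pos[n][0], pos[n][1] = x, y
        vel[n][0] = vel[n][1] = 0.0


if __name__ == "__main__":
    k = stiffness_for_tone(1.0, 2.0)  # resonate at 2 Hz
    pos, vel = [[0.0, 0.0], [1.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]
    dt = 0.001
    for frame in range(2000):
        drive = 0.05 * math.sin(2 * math.pi * 2.0 * frame * dt)  # stand-in for audio
        step(pos, vel, [(0, 1, k, 1.0)], dt, driven={0: (drive, 0.0)})
    print(pos[1])
```

For rendering, the per-edge stored energy mentioned above would be 0.5 * k * (length - rest)^2, computed from the same quantities inside the edge loop.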

The variations are really endless. For example, with gravity and stiff (or entirely rigid) edges, you could make a chaos pendulum. By allowing edges to dynamically break and form based on proximity and/or energy, you could get all kinds of dynamic clustering behavior, which might look like molecules forming or something.

A hardware implementation (i.e., actual springs) would be badass in the extreme, although I imagine it would be finicky to set up and tune.

Idea: immersive video with one projector

This is an idea I had while lying in bed listening to Radiohead and hallucinating. (I was perfectly sober, I swear. The Bends is just that damn good.)

Build a frame structure (out of PVC or similar) with the approximate width/depth of a bed, and height of a few feet -- enough that you could comfortably lie on a mattress inside and not feel claustrophobic. Cover every side with white sheets, drawn taut. Mount a widescreen projector directly above the middle of this structure, pointing down. Then hang two mirrors such that the left third of the image is reflected 90 degrees to the left and the right third is reflected 90 degrees to the right (from the projector's orientation), with the middle third projecting directly onto the top of the frame. Then use more mirrors to get the left and right images onto the corresponding sides of the frame. (You'd probably also need some lenses to make everything focus at the same time; this is the only part I'm really iffy on. Fresnel lenses would probably be a good choice. Anyone who knows optics and has any idea how to set this up, please let me know.)

Anyway, the beauty of this setup is that it allows one to control nearly the whole visual field with a single projector and a single video output, thus minimizing complexity and expense. It's not hard to set up OpenGL to render three separate images to three sections of the screen; they could be different viewpoints in the same 3D scene, although as usual I'm more interested in the more abstract uses of this. In particular, you get control over both central and peripheral vision, which has psychovisual importance.
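The bookkeeping for the three-way split is simple. This sketch computes the three rectangles as (x, y, width, height) tuples, which is the argument order `glViewport` takes; the helper name is made up, and the actual rendering calls are left out.

```python
def three_viewports(width, height):
    """Split a width x height framebuffer into left, middle and right thirds.

    Returns three (x, y, w, h) tuples. Edges are rounded so the thirds tile
    the full width even when it is not divisible by three.
    """
    edges = [round(i * width / 3) for i in range(4)]
    return [(edges[i], 0, edges[i + 1] - edges[i], height) for i in range(3)]


if __name__ == "__main__":
    for rect in three_viewports(1920, 1080):
        print(rect)
```

Each frame would then loop over the rectangles, set the viewport, point the camera left, up or right, and draw the scene once per rectangle.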

I'm really tempted to build this when I get back to Tech, but there's a high probability that someone else's expensive DLP projector will suffer an untimely demise at the hands of improvised mounting equipment.

Edit: I thought of an even simpler setup that does away with the mirrors and lenses. Make the enclosure a half-cylinder, and project a single widescreen image onto it (orienting left-right with head-feet), correcting for cylindrical distortion in software. The major obstacle here is making a uniformly cylindrical projection surface, but that shouldn't be too hard.

Posted by Keegan at 27.6.07 (2 comments)

Labels: graphics, hardware, ideas, optics, recreational neuroscience, vision

20070615

Sensory Deprivation, a fucking ripoff

I tried the sensory deprivation experiment this afternoon. I prepared by shutting off my room: lights off, door closed, shades down, and so on. I then lay on my bed, covered my eyes with a mask, put earplugs in, checked my crashbox, and went exploring.

Initial preparations were encouraging, as I felt a sensitivity in my skin and tinnitus-like effects. I experienced some closed-eye visuals along with a sudden suppression of conscious thought at an estimated 20 minutes. However, nothing more came of this, and I exited the experiment at BLT 1:30 when I realized I had fallen asleep and was dreaming. Because I could not check in the dark whether my crashbox was recording, all actual data was lost. The only lingering effect was extreme difficulty writing this post, which may be attributed to just having woken up.

My dreams involved being trapped in the Dabney computer lab with a couple of nerds arguing about the best way to install a cracked version of CnC 3 on the lab machines, so sensory deprivation may hold promise as a method of torture.

--Biff-"sticking to chemistry"-motron

20070602

I am an RSS feed classifier

Thanks to Google Reader, I now spend much of my time trying to keep up with an unbelievable flood of information from the blogosphere. I'm now tagging the stuff I find interesting; there's also an RSS feed of that. I'm working through a large backlog right now, so it will be moving fast.

I know I'm late at getting into it, but the ideas behind this blogging / RSS / Web 2.0 stuff are really cool -- everyone produces a little bit of content while filtering and correlating other content. Of course, this is the same thing humans have been doing for millennia, but we're reaching unprecedented levels of participation, connectivity, and information processing rate. How far can this growth continue before we start to see fundamental shifts in the nature of human thought -- or have these shifts already occurred, maybe out of sight of our limited perspective? Maybe the development of language was such a shift, and we've just been pushing the performance of that development ever higher.

20070530

20070519

Kentucky Meine Heimat

Old Kentucky Land, bist meine Heimat.

Muss dich wiedersehn, einmal wiedersehn.

Old Kentucky Land, bist meine Heimat.

Dich und Rosemarie vergisst ein Cowboy nie.

(lyrics here)

I wish I could post the MP3, but for now, you'll either have to hunt down your own contraband copy of the large integer that encodes this unforgettable piece of cultural confusionism or use your imagination to find out what Volkstümliche Country would sound like. I love the Kingston Trio.

And of course, a vapid post would not be complete without a shameless plug, or a reference to the fact that the California Minimum Wage applies to all professions except sheepherding.

20070517

Botborg: more video feedback art

'Botborg is a practical demonstration of the theories of Dr Arkady Botborger (1923-81), founder of the 'occult' science of Photosonicneurokineasthography - translated as "writing the movement of nerves through use of sound and light". Botborg claim that sound, light, three-dimensional space and electrical energy are in fact one and the same phenomena, and that the capacity of machines to alter our neural impulses will bring about the next stage in human evolution.'

I like the concept, but it's kinda ugly. In a pure visual-aesthetics sense, I think we could do better. On the flip side, it's also nicely disturbing (it makes some nice growling noises).

On a related note, do any of you know anything about applying to art schools in new-media art? I have some idea of which schools I'd apply to, but no idea how to convince them to take me seriously (or indeed how to convince myself to take me seriously).

20070510

Survival Machines

Four thousand million years ago, what was to be the fate of these replicators? They did not die out, for they are past masters of the survival arts. But do not look for them floating loose in the sea. They gave up that cavalier freedom long ago. Now they swarm in huge colonies, safe inside gigantic lumbering robots, sealed off from the outside world, communicating with it by tortuous indirect routes, manipulating it through remote control. They are in you and in me; they created us body and mind; and their preservation is the ultimate rationale for our existence. They have come a long way, these replicators. Now they go by the name of genes, and we are their survival machines.

--Richard Dawkins

As semi-legitimate scientists (and not biologists), we probably all think that Dawkins is pretty damn cool. But it never hurts to be reminded of this fact. Dawkins's views about evolution, and the role of the gene as the fundamental unit, are some of the most sensible things I've ever read.

As I continue reading The Selfish Gene, I will try to more fully develop my semi-bullshit theory of memetics. One of the things I believe is that we are very close to self-replicating machine life, at which point the meme will surpass the gene as the fundamental unit of survival. Even if classic nano-replicators are impossible, more and more of the interesting activity on Earth is taking place in entirely virtual realms. Assuming the fundamental substrate survives, software will become increasingly complex, and free itself from using us as agents of its selection.

20070506

Flock: for saxophone quartet, audience participation, and video

This work incorporates several of the ideas we discussed here previously, relating to crowd feedback and such. Looks pretty cool.

20070503

More on VR for consciousness hacking

I was talking to Biff today about uses for various senses in the VR consciousness hacking idea. It occurred to me that smell is very low-bandwidth, but strongly tied to memory, and thus might be useful for maintaining state across multiple sessions.

Apparently Terence McKenna was also interested in using VR for similar purposes. I'm not sure if that makes the idea more or less credible.

In other news, the laser glove is about 80% done; all I need to do is wire it up. I need to talk to some sort of EE person about how to do this without exploding the lasers from overcurrent.

Posted by Keegan at 3.5.07 (3 comments)

Labels: input, notes, recreational neuroscience, smell, strange loop, vr

20070429

You weary giants of Roombas and broomsticks

Today, I was reading "I am a strange loop", and while immersed in a story about virtual presence, I realized that it would be really cool if you could build a telepresence robot out of, say, a Roomba, a broomstick, and a Macbook.

Some clever guys obviously beat me to it. Check out these. For those who don't want to bother clicking the link: it's a telepresence robot that looks like... well, not all that different from a Macbook on a broomstick on a Roomba. Note that they plan to sell these at "between $1800 and $3000" in "2008"; by my estimate, the lower end of that might be less than the cost of the parts you'd need to make one.

And here's another fun question: what is the interaction of telepresence and immigration laws? If I live in one country, but my job is "in" another, where am I legally employed? This question first became very real to me when I lived in the US as a student. I wondered what the legal consequences would be if I, through the primitive telepresence technologies of e-mail, telephone and ssh, were to do a little freelance work for a Dutch company in Holland, paid in euros into my Dutch bank account, with the Dutch company possibly never even realizing I was in California. (I never did it, but an American I know here does do the exact opposite.)

And why shouldn't he? Given ever improved telepresence technologies (I am really starting to like that buzzword, even though it's probably already gone out of style), immigration laws start seeming not only backwards and selfish, but positively hilarious. And that last thing is a good thing, because in the end, the only way you can really fight The Man is to poke fun at Him...

I have more to say about the political implications of this, but meanwhile, you can ponder whether John Perry Barlow was onto something when he wrote this (emphasis mine):

Governments of the Industrial World, you weary giants of flesh and steel, I come from Cyberspace, the new home of Mind. On behalf of the future, I ask you of the past to leave us alone. You are not welcome among us. You have no sovereignty where we gather.

We have no elected government, nor are we likely to have one, so I address you with no greater authority than that with which liberty itself always speaks. I declare the global social space we are building to be naturally independent of the tyrannies you seek to impose on us. You have no moral right to rule us nor do you possess any methods of enforcement we have true reason to fear.

Governments derive their just powers from the consent of the governed. You have neither solicited nor received ours. We did not invite you. You do not know us, nor do you know our world. Cyberspace does not lie within your borders. Do not think that you can build it, as though it were a public construction project. You cannot. It is an act of nature and it grows itself through our collective actions.

20070428

RepRap : a self-replicating rapid prototyper

RepRap is a project to make a rapid prototyping machine (aka 3D printer) which can build most of its own parts, with a total cost of under $500. There are already several working prototypes, and they "hope to announce self-replication in 2008".

"RepRap etiquette asks that you use your machine to make the parts for at least two more... for other people at cost."

If this achieves the exponential growth that they're obviously aiming for, it will enable open source distributed development of physical objects (including of course itself), which would be nothing short of revolutionary.

And their canonical test object is a shotglass.

20070427

Manna

An interesting short story by futurist Marshall Brain. It has implausibilities, it's really preachy in places, and I dislike most futurists as a rule, but I thought it was thought-provoking enough to be worth reading. Opinions are welcome.

20070423

Microrobotically fabricated biological scaffolds for tissue engineering

My first paper credit! This was a bio-engineering project. We explored a new fabrication method for building submicron-scale fibrous constructs out of the biodegradable polymer polylactic acid (PLA).

Designing fibrous, biodegradable, patterned substrates is relevant for tissue engineering: they provide a mechanical substrate to guide the structural development of the tissue. We cultured the mouse myoblast (muscle building) C2C12 cell line (which has been immortalized since 1977) on the constructs. The cells adhered to the fibers and replicated happily.

You can download the conference paper here.

We took some beautiful confocal and electron microscopy images:

Below is a false-color confocal image of the cells proliferating on the scaffold. The PLA fibers (blue) were imaged in brightfield. The α-tubulin-GFP fluorescence is in green, with fine structures highlighted in yellow.

Here are a few more fluorescence and electron microscopy images (click on thumbnail to view full size):

If you would like to reproduce or refer to these images, you can cite the paper as:

Nain, A.S., Chung, F., Rule, M., Jadlowiec, J.A., Campbell, P.G., Amon, C. and Sitti, M., 2007, April. Microrobotically fabricated biological scaffolds for tissue engineering. In Proceedings 2007 IEEE International Conference on Robotics and Automation (pp. 1918-1923). IEEE.

Posted by M at 23.4.07 (0 comments)

Labels: art, bioengineering, biology, c2c12, cell culture, confocal, fluorescence, gfp, microscopy, papers, pla, science, tissue engineering, α-tubulin

More on crowd feedback

Everyone has cellphones now, right? If you had a few highly directional antennae you might be able to use the amount of RF activity in a few cellphone bands as an approximation to crowd activity. You could also look for Bluetooth phones and perhaps remember individual Bluetooth IDs, although I'm not sure if most phones will respond to Bluetooth probes in normal operation.

Another approach would be suitable for a conference or other event where participants have badges. Simply put an RFID tag in each badge and have a short-range transceiver near each display. Now the art not only responds to aggregate preferences, but it also personalizes itself for each viewer. Effects which have previously held a participant's attention will appear more often when that participant is nearby. This will probably result in overall better evolutionary art -- instead of trying to impress the whole crowd, which is noisy and fickle, the algorithm tries to impress every individual person. While it's impressing one group, other people may be attracted in, and this constitutes more upvotes for that gene.

I think one important feature for this to work effectively is a degree of temporal coherence for a given display. If they're each showing short, unrelated clips (like Electric Sheep does), then people will not move around fast enough for the votes to be meaningful. Rather, each display should slowly meander through the parameter space, displaying similar types of fractal for periods on the order of 10 minutes (though of course there may be rapidly-varying animation parameters as well; these would not be parameters in the GA, though their rates of change, functions used, etc. might be).
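One simple way to get that kind of slow meandering is a bounded random walk over the parameter vector. This is only a sketch under assumptions: the function name `meander`, the step size, and the [0, 1] parameter range are all invented here, and a real system would probably want something smoother than independent uniform steps.

```python
import random


def meander(start, step=0.01, lo=0.0, hi=1.0, seed=None):
    """Yield an endless sequence of parameter vectors that drift slowly.

    Each coordinate moves by at most `step` per tick and is clamped to
    the range [lo, hi], so consecutive frames always look similar.
    """
    rng = random.Random(seed)
    point = list(start)
    while True:
        yield tuple(point)
        point = [min(hi, max(lo, p + rng.uniform(-step, step)))
                 for p in point]
```

With one tick per second and a step of 0.01, a display would take on the order of minutes to wander noticeably far from where it started, which is roughly the time scale described above.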

20070422

Idea : fractally compressed AR

This is an augmented reality idea I had while walking around looking at trees after Drop Day. Basically, one would wear a VR headset that displays imagery from the outside world, except that occurrences of similar visual objects get replaced with the exact same object, or the same object perturbed in some synthetic way.

So, for example, the leaves of a tree would get replaced with fractals that are generated to look like leaves. As another example, areas of the same "texture" could be identified (basically, areas with little low-frequency spatial component, possibly after a heuristically determined perspective correction). Then a random small exemplar patch is selected and used to fill the entire area with Wei & Levoy / Ashikhmin-style synthetic textures.

The point of all of this is that you're essentially applying lossy compression (by identifying similar regions and discarding the differences between them), then decompressing and feeding the information into the brain (and thus mind). Working on the assumption that consciousness essentially involves a form of lossy compression which selects salient features and attenuates others, you can determine the degree and nature of this compression by determining when a similar, externally applied compression becomes noticeable or incapacitating.

My guess is that there will be a wide range of compression levels where reality is still manageable and comprehensible but develops a highly surreal character. Of course to experiment meaningfully you'd need a good enough AR setup that the hardware itself doesn't introduce too much distortion, although you could also control for this by having people use the system without software distortions.

Posted by

Keegan

at

22.4.07

0

comments

Labels: ar, fractals, graphics, ideas, optical illusions, recreational neuroscience, tessellations, vr

The McCollough effect: a high-level optical illusion

See here for a demonstration, if you're not familiar. It seems like an afterimage effect at first, but can last for weeks, apparently affects direction-dependent edge detection in V1, and correlates with extroversion. Weird, eh?

Posted by

Keegan

at

22.4.07

0

comments

Labels: graphics, optical illusions, recreational neuroscience

BLIT : a short story

BLIT: a short story by David Langford

Terrifyingly relevant to what Mike and I are working on.

"2-6. This first example of the Berryman Logical Image Technique (hence the usual acronym BLIT) evolved from AI work at the Cambridge IV supercomputer facility, now discontinued. V.Berryman and C.M.Turner [3] hypothesized that pattern-recognition programs of sufficient complexity might be vulnerable to "Gödelian shock input" in the form of data incompatible with internal representation. Berryman went further and suggested that the existence of such a potential input was a logical necessity ...

... independently discovered by at least two late amateurs of computer graphics. The "Fractal Star" is generated by a relatively simple iterative procedure which determines whether any point in two-dimensional space (the complex field) does or does not belong to its domain. This algorithm is now classified."

What do you think the odds are that we make something like this?

Posted by

Keegan

at

22.4.07

2

comments

Labels: fiction, graphics, optical illusions, perceptron, recreational neuroscience, vision

idea : VR for consciousness hacking

Ooh, interpolating tessellations is an awesome idea. You'd basically have to interpolate under a constraint: that some parts of the spline line up with other parts. But since this constraint is satisfied at all reference points, I think it would be doable.

I've been thinking lately about virtual reality as a tool for consciousness hacking. VR as played out in the mid-90s was mostly about representing realistic scenes poorly and at great expense. But I think we can do a lot with abstract (possibly fractal-based) virtual spaces, and the hardware is much better and cheaper now. The kit I'm imagining consists of:

- 3D stereoscopic head-mounted display with 6DOF motion tracker (like this)

- High-quality circumaural headphones (like these)

- Homemade EEG (like this)

- Possibly other biofeedback devices (ECG, skin resistance, etc.)

- Intuitive controllers (e.g. data glove like this, camera + glowing disks for whole-body motion-tracking, etc.)

- A nice beefy laptop with a good graphics card

- Appropriate choice of alphabet soup and related delivery mechanism, if desired

- A locking aluminum equipment case with neat foam cutouts for all of the above

Posted by

Keegan

at

22.4.07

Labels: audio, graphics, ideas, input, recreational neuroscience, strange loop, vr

20070421

Idea : crowd feedback

This is another idea relating to video feedback systems. Imagine an exhibition of a system like Perceptron on several monitors throughout a gallery space. A set of cameras watches the crowd from above, and uses simple frame differencing and motion detection algorithms to determine a map of activity level across the space. This then feeds into the video system; perhaps each region of the space is associated with some IFS function, seed point, or color, and the activity level in that region determines how prominently that feature affects the images.
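Here's a minimal sketch of the frame-differencing step, assuming grayscale frames arrive as plain 2D lists of 0-255 values. The function names, the grid layout, and the threshold are all assumptions for illustration; a real installation would use a proper camera and image library.

```python
def activity_map(prev_frame, curr_frame, rows, cols, threshold=16):
    """Return a rows x cols grid of activity levels in [0, 1].

    Frames are equal-sized 2D lists of grayscale values (0-255).
    Each grid cell reports the fraction of its pixels whose brightness
    changed by more than `threshold` between the two frames.
    """
    height, width = len(curr_frame), len(curr_frame[0])
    changed = [[0] * cols for _ in range(rows)]
    total = [[0] * cols for _ in range(rows)]
    for y in range(height):
        r = y * rows // height          # grid row containing this pixel
        for x in range(width):
            c = x * cols // width       # grid column containing this pixel
            total[r][c] += 1
            if abs(curr_frame[y][x] - prev_frame[y][x]) > threshold:
                changed[r][c] += 1
    return [[changed[r][c] / total[r][c] if total[r][c] else 0.0
             for c in range(cols)] for r in range(rows)]


def blend_weights(activity):
    """Flatten the grid into per-region weights that sum to 1."""
    flat = [a for row in activity for a in row]
    s = sum(flat)
    if s == 0:
        return [1.0 / len(flat)] * len(flat)   # quiet room: equal weights
    return [a / s for a in flat]
```

Each weight could then scale how strongly that region's function, seed point, or color contributes to the image.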

Each monitor can display a different view of the same overall parameter space, so at any given time there will be some "interesting" and some "boring" monitors. Viewers are naturally drawn towards more "interesting" (by their own aesthetic senses) monitors, and in moving to get a better look they affect the whole system. In essence, the aesthetic preferences of the viewers (now participants) become another layer of feedback.

If Hofstadter is right, and "strange loops" give rise to conscious souls, then should the participants in such an exhibition be considered as having (slightly) interconnected souls? If so, how does the effect compare in magnitude to the interconnectedness we all share through the massive feedback loop of the everyday world? Does this effect extend to the artist who makes the system and then sits back and watches passively? What about the computer that's making it all happen? Is any of this actually "strange" enough to be considered a strange loop? All of these questions seem fantastically hard to answer, but it's a lot of fun to think about.

Posted by

Keegan

at

21.4.07

0

comments

Labels: graphics, ideas, media art, philosophy, strange loop

Idea : the laser glove

This is an idea I had for a simple, dirt-cheap glove-based input device, for intuitive control of audio/video synthesizers like Perceptron and such. It consists of a glove with a commodity red laser pointer oriented along each finger. This allows the user to control the shape, size, and orientation of a cluster of laser dots on a screen. A webcam watches the screen and uses the dots to control software.

The software could do any of a number of things. One approach would be to fit the dot positions to a set of splines, then use properties of these splines such as length, average direction, curvature, etc. as input parameters to the synthesizer system. At Drop Day we had a lot of fun pointing lasers at feedback loops, and that was without any higher-level processing.
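To make that concrete, here's a rough sketch that swaps the spline fit for something cruder: it connects the detected dots into a polyline (ordered left to right, which is an assumption) and pulls out a length, an overall direction, and a total turning angle as a stand-in for curvature. The function name and the returned keys are invented for illustration, and dot detection from the webcam image is not shown.

```python
import math


def dot_features(dots):
    """Summarize a cluster of (x, y) laser-dot positions.

    Returns the polyline length, the direction (radians) from the first
    dot to the last, and the total absolute turning angle along the path.
    """
    pts = sorted(dots)  # assumption: order the dots left to right
    if len(pts) < 2:
        return {"length": 0.0, "direction": 0.0, "turning": 0.0}
    # Displacement vectors between consecutive dots
    segs = [(bx - ax, by - ay) for (ax, ay), (bx, by) in zip(pts, pts[1:])]
    length = sum(math.hypot(dx, dy) for dx, dy in segs)
    direction = math.atan2(pts[-1][1] - pts[0][1], pts[-1][0] - pts[0][0])
    turning = 0.0
    for (ax, ay), (bx, by) in zip(segs, segs[1:]):
        # Angle between consecutive segments, from cross and dot products
        turning += abs(math.atan2(ax * by - ay * bx, ax * bx + ay * by))
    return {"length": length, "direction": direction, "turning": turning}
```

Spreading the fingers changes the length, rotating the wrist changes the direction, and curling the fingers changes the turning angle, so each feature could drive a separate synthesizer parameter.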

Laser pointers are now less than $1.00 each, bought in bulk on eBay. (I have several dozen in my room right now.) I don't know of a good way to differentiate one dot or user from another without adding a lot of complexity, but I think cool effects could be had by just heuristically detecting dot formations. The emergent behavior from multiple interacting users might be desired, anyway.

On a slightly related note, here's some guy's MIT Media Lab thesis that I found while browsing the Web one fine day: Large Group Musical Interaction using Disposable Wireless Motion Sensors

20070416

I'm on the Interblag!

I finally got around to writing something for this blog. First, I have a quotation from our beloved Admiral:

"Do you want this fire extinguisher? No? How about this tag that says 'do not remove under penalty of fire marshal'?"

Second, the Perceptron screenshots on here look really damn good, and I decided I should post some of what I've been working on lately as well. This started yesterday as a CS 101 assignment and kind of got out of control.

There's also a movie here of me playing with it. It's 56M and still looks shitty due to compression, but you can at least get the idea. May cause seizures in a small percentage of the population.

Update: The video is (maybe) on YouTube now:

20070409

Jesus Died For Somebody's Sins, But Not Mine

In my college tour of doom, the first school I had the joy of visiting was Hampshire. My good friend Dusty goes there, and she told me to get in early, because Sunday was their annual Easter Keg Hunt, a magical time where students from all over the 5 colleges come to Hampshire to get their drunk on.

I arrived at 1:45, called my contact Dusty, and she immediately handed me a mug full of delicious high-quality beer that tasted of blueberries and love. We went back to her place, where they had a keg of the stuff in the shower. Several glasses later, I found myself in another apartment with a massive stoner circle of hippies, passing around Isaac Haze, one of the largest, most powerful bongs I have ever experienced. I ran into a guy who I'll call Dave, a Physics/Math double major who "took a lot of acid, and realized that reality was an illusion, and that only through insanity could we find the truth." Generally, one of the crazier people I've met.

Shortly thereafter, a bunch of us went for a walk in the woods. We had only gone 20 feet in when we found another keg. After more drinking and wandering, I got cold and headed back to Dusty's place. Inside were a bunch of UMass students and some hot Hampshire artists, who were passing around these truly epic blunts and playing Mario Kart 64. After 3 of those deadly fatties, I felt the tiredness coming on, and I passed out on Dusty's floor.

All in all, a truly epic day.

20070405

20070315

Kelso Dunes

There are wonders all around us; go out and find them.
