BIG UPS!

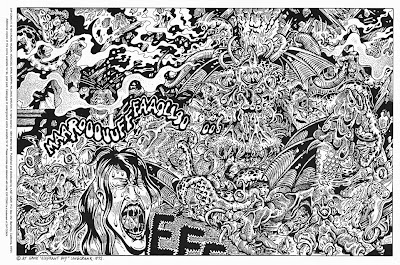

fig.A - Big Ups #1 back cover - "Our Hero" (stop staring.)

"Pop culture used to be like LSD – different, eye-opening and reasonably dangerous. It’s now like crack – isolating, wasteful and with no redeeming qualities whatsoever" --Peter Saville

Big Ups is a way of pushing the context of comics. At first it's a simple gesture - email your friends, get some really great art together, put it in a handmade book, release a CD with it, and get it out there one way or the other. On the other hand, the simple, do-it-yourself nature of it all is more political, I think, than saying, outright, "this is a political gesture". The diversity of the art married to the simple fact that "comics are what make you smile" - what does that mean for kids who want to see something different? For us, I think the unexplainable is way more fun than a one-liner telling you where to go.

This is where we turn to the back cover, featuring a stylish headshot of our hero, Glenn Beck (see fig. A). It's a simple experiment involving an obviously bad political influence (in the sense that he's simply obtuse), transformed into a mandala-like shrine to obfuscation of priority. That's why it's going on the back cover, unexplained, utterly beautiful and strange - it represents a 'beyond' of political discourse. The image itself is simply entertaining, but also confusing as hell. The act of fucking with an image of Glenn Beck (or Bill O'Reilly, or Jon Stewart, for that matter) is like burning an effigy that looks nothing like the subject. Plenty of people want to lay into Our Hero for being an idiot, but personally I don't have time to even concern myself with the nonsense that leaves his mouth. In other words, the mere act of transforming a likeness of Glenn Beck into a psychedelic icon/monster is an exercise in political discourse that aims to make political discourse obsolete. It represents the purpose of Big Ups, or anything anyone makes themselves - it demonstrates that we're doing just fine with or without Our Hero.

Peter Saville's apt quip reverse-engineers the idea nicely - essentially we are taking crack-like pop culture and turning it into a moderately valuable form of cultural LSD, in that it is different, it is confusing, and it is associated with the practice of creating situations for yourself again, rather than having them mindlessly sold to you.

Underground comics in the sixties and seventies did this all the time - ZAP!, for example (see Fig. B), was famous for it, making drug references seem as common as breathing, and featuring horrifyingly (and entertainingly!) detailed graphic scenes of sexual and/or violent natures (Fig. C), or art that was too confusing and gruesome and difficult to be considered acceptable by the mainstream (Fig. D). It was art that sought beauty where others saw filth. It was art for people who got it - anyone who didn't was encouraged to expand their consciousness to do so, or get the fuck out. MAD magazine and National Lampoon were also famous for doing stuff like that, but they also took the more hard-hitting, easy-to-understand route, making direct parodic shots at political figures, pop culture icons/events, etc. that the mainstream could latch onto more easily. I'm partial to stuff like ZAP! for the very reason that it made no sense whatsoever. It reminds me that life is a little more complicated than following people like Glenn Beck on their journey towards ultimate stupidity.

(Note - please see fig. E for the Big Ups back cover's direct inspiration.)